Responding to the Call of Things

A Conversational Approach to 3D Animation Software

2. Context of practice

As suggested by Manovich (2001; see above), 3D animation practice often seems to involve choosing from ready-made solutions provided by CG researchers. But there are a number of 3D animators and digital artists who do more than simply utilise existing tools and implement standard workflows. Although these artists use commercial software, their work moves beyond conventions and visual styles associated with 3D software and with digital media more generally. This section discusses the work of some of these artists and asks: how do they do it? What tools and methods do they use? In order to contextualise the work of these digital artists, this section discusses computer graphics research, Technical Rationality (Schön, 1991), media transparency and orthodox approaches to 3D software.

Early computer graphics research

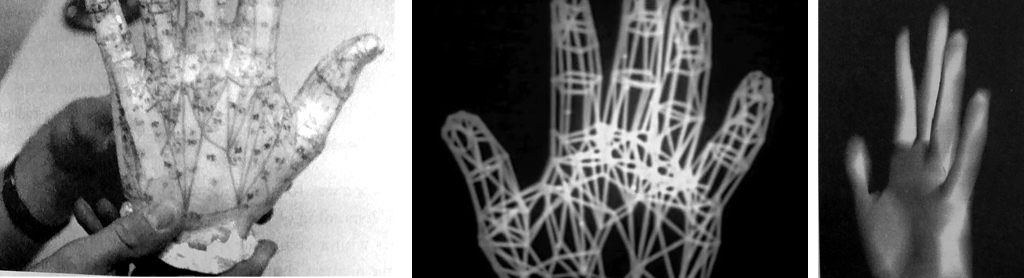

In the early 1970s, before the development of commercial 3D software packages, creating a virtual 3D mesh was a less intuitive and more technical process than it is today. In those days, 3D modelling involved activities such as calculating coordinates and entering them as text into a computer. As described above, this was the approach taken by Sutherland and his students while digitising a VW car. It was also the approach taken by Ed Catmull (also a student at the University of Utah) to create a digital model of his own hand (Figure 2.1; Catmull, 2014, p. 14).

Catmull chose to digitise a hand because he wanted to render a complex object with a curved surface (Catmull, 2014, pp. 14–15). After making a plaster cast of his left hand, he drew polygons onto the plaster model. He then measured the coordinates defining each polygon and entered that data into a 3D animation program that he had written. His first renders were faceted and “boxy”, but with the help of “smooth shading” techniques developed by other students he was able to render a smoother, more organic-looking form.

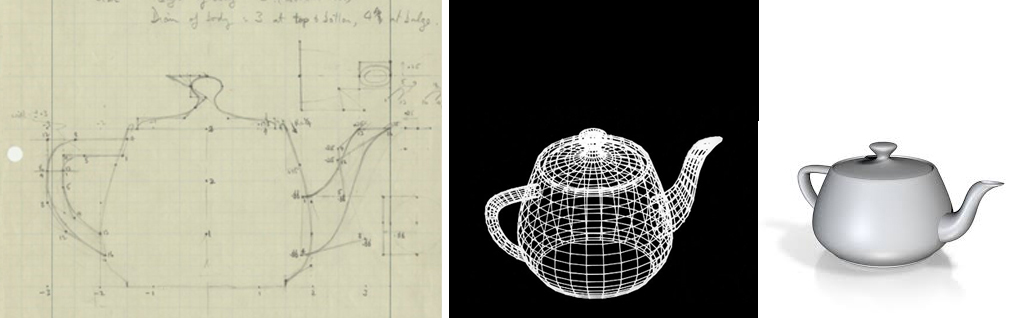

Another method for calculating coordinates was to draw an object onto graph paper. Figure 2.2 shows an original sketch by Martin Newell created during the course of his PhD research project undertaken at the University of Utah in 1975 (computerhistory.org, n.d.). Newell sketched a teapot onto graph paper and then converted the sketch into Bézier curves on a computer. Apparently (computerhistory.org, n.d.; wikipedia.org, n.d.-b) the teapot that Newell sketched was his own, and it seems safe to assume that the teapot was significant to Newell in a variety of ways (e.g. practical, financial and perhaps even emotional). However, in digitising the teapot, Newell was not interested in its personal significance or even its physical proximity; he was interested only in properties that can be described mathematically. Specifically, he was interested in the teapot’s form, i.e. its extension in space.
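To make the idea of describing a sketched outline “mathematically” more concrete, the following is a minimal sketch of how a single cubic Bézier curve can be evaluated from four control points. It is not Newell’s data or program; the function name and the control points are hypothetical and chosen only for illustration.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bezier curve at parameter t, where 0 <= t <= 1."""
    u = 1.0 - t
    # Bernstein weights for the four control points (they always sum to 1).
    w0, w1, w2, w3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return tuple(
        w0 * a + w1 * b + w2 * c + w3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

# Hypothetical control points, as they might be read off a sheet of graph paper.
profile = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

# Sample the curve at five evenly spaced parameter values.
samples = [cubic_bezier(*profile, i / 4) for i in range(5)]
```

The curve starts at the first control point and ends at the last, while the two middle points only pull the curve toward them. A handful of numbers is therefore enough to stand in for a hand-drawn contour.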

As a 3D mesh, Newell’s virtual teapot exists in a kind of vacuum, devoid of context. In contrast to the original teapot, the digital model (existing as data) can be easily manipulated; it can be scaled, moved, rotated or deformed and it can also be duplicated and shared. Newell made the dataset publicly available and the Utah teapot quickly became a standard for the development of various computer lighting models (wikipedia.org, n.d.-b; simplymaya.com, n.d.).
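The ease of manipulation described above follows from the fact that a mesh is, at bottom, a list of coordinates. The sketch below assumes a hypothetical four-vertex mesh and hypothetical helper names; it illustrates the principle and is not the code of any particular 3D package.

```python
import math

# Hypothetical mesh: four corners of a unit square in the XZ plane,
# stored as plain (x, y, z) coordinates.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0)]

def scale(vertices, factor):
    """Scale every vertex uniformly about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]

def translate(vertices, dx, dy, dz):
    """Move every vertex by the same offset."""
    return [(x + dx, y + dy, z + dz) for x, y, z in vertices]

def rotate_y(vertices, degrees):
    """Rotate every vertex about the Y axis."""
    angle = math.radians(degrees)
    c, s = math.cos(angle), math.sin(angle)
    return [(x * c + z * s, y, -x * s + z * c) for x, y, z in vertices]

def duplicate(vertices):
    """Copy the mesh; the copy can be edited without touching the original."""
    return list(vertices)

moved = translate(scale(square, 2.0), 0.0, 1.0, 0.0)
turned = rotate_y(square, 90.0)
copy = duplicate(square)
```

Each operation returns a new list of numbers and leaves the input untouched, which is one reason a dataset such as the teapot can be shared and reused so freely.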

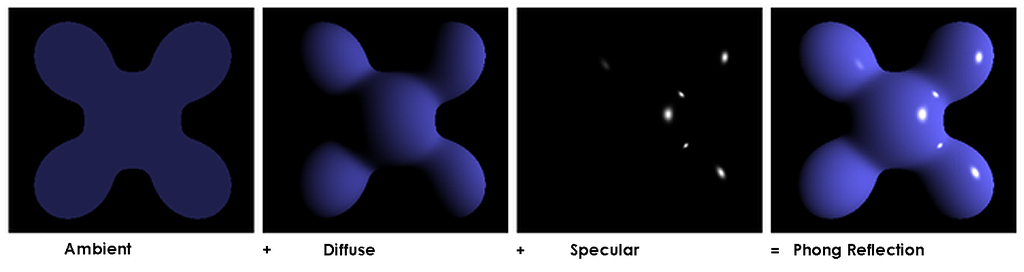

One such algorithmic model was developed in 1975 by Phong Bui Tuong, also a student at the University of Utah (Guha, 2015, p. 425; wikipedia.org, n.d.-a). Commonly referred to as Phong shading, this lighting and shading model is based on Phong’s observation that objects with a matte surface (such as a piece of chalk) have a large area of highlight which falls off gradually, while shiny objects (such as a billiard ball) have a smaller area of highlight which is brighter and falls off more acutely (wikipedia.org, n.d.-a). Drawing on his own experience of everyday things, Phong was able to develop a multi-purpose lighting and shading model which can be used to depict a variety of different surface types.
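For readers who want to see how this observation becomes a formula, the Phong reflection model is commonly written, for a single light source, in roughly the following form. The symbol names used here are a conventional choice rather than notation taken from the sources cited above.

$$
I = k_a\, i_a + k_d\,(\hat{L}\cdot\hat{N})\, i_d + k_s\,(\hat{R}\cdot\hat{V})^{\alpha}\, i_s
$$

Here $\hat{N}$ is the surface normal, $\hat{L}$ points toward the light, $\hat{R}$ is the mirror reflection of $\hat{L}$ about $\hat{N}$, and $\hat{V}$ points toward the viewer. The constants $k_a$, $k_d$ and $k_s$ weight the ambient, diffuse and specular contributions, and $i_a$, $i_d$ and $i_s$ are the corresponding light intensities. The exponent $\alpha$ controls how quickly the highlight falls off. For example, at a viewing direction where $\hat{R}\cdot\hat{V} = 0.9$, a low exponent such as $\alpha = 5$ gives $0.9^{5} \approx 0.59$ (a broad, chalk-like highlight), whereas $\alpha = 100$ gives $0.9^{100} \approx 0.00003$ (a tight, billiard-ball-like highlight that has already vanished at that angle).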

Emergence of commercial software

Throughout the 1970s individuals or teams of CG researchers/practitioners purchased computer hardware and had to develop reusable programs themselves (Carlson, 2003). Due to the cost of hardware and the expertise required, these activities were largely restricted to university and industry-supported research institutions. But innovations by researchers like Sutherland, Newell and Phong were soon converted into marketable products, and throughout the 1980s 3D computer graphics software became available to a larger number and greater diversity of users (Carlson, 2003; Guha, 2015, p. 8). By the late 1980s it had become possible for users to create 3D graphics with little or no knowledge of the code behind their creations. Phong’s is currently the most widely used lighting model in 3D computer graphics (Guha, 2015, p. 425) and Newell’s teapot dataset is available in many 3D software packages with the click of a button (“Why is there a teapot in 3DS Max? - The origin of common 3D models,” n.d.).

Figure 2.4 illustrates how a Phong reflection model might be used by a contemporary 3D software user to shade ceramic and metallic surfaces. Based on observations of either a real coffee cup or of photographic reference, the user enters values for ambient, diffuse and specular parameters; these are the parameters specified for user input by the algorithm’s designer. The user manipulates values either by dragging a slider or entering numeric values via the keyboard. Test renders are made and values are tweaked until the desired result is achieved.
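As a rough illustration of what happens to the values a user enters, the sketch below evaluates a Phong-style intensity at a single surface point. It assumes one white light of unit intensity. The `shininess` parameter and all numeric values are illustrative assumptions, and the function is a simplification rather than the implementation used by any particular package.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def phong_intensity(normal, to_light, to_viewer,
                    ambient, diffuse, specular, shininess):
    """Brightness at one surface point under a single unit-intensity light."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = max(dot(n, l), 0.0)  # surfaces facing away receive no diffuse light
    # Mirror the light direction about the normal: r = 2(n.l)n - l
    r = tuple(2.0 * dot(n, l) * nc - lc for nc, lc in zip(n, l))
    r_dot_v = max(dot(r, v), 0.0) if n_dot_l > 0.0 else 0.0
    return ambient + diffuse * n_dot_l + specular * (r_dot_v ** shininess)

# Viewer tilted 20 degrees away from the mirror direction.
tilt = math.radians(20.0)
viewer = (0.0, math.sin(tilt), math.cos(tilt))
up = (0.0, 0.0, 1.0)

# Hypothetical slider settings: identical except for the highlight exponent.
matte = phong_intensity(up, up, viewer, 0.1, 0.6, 0.3, shininess=5)
glossy = phong_intensity(up, up, viewer, 0.1, 0.6, 0.3, shininess=100)
```

With these settings the low-exponent surface is still noticeably lit by its highlight at a 20 degree tilt, while the high-exponent surface has almost lost it. This is the kind of difference a user tunes by eye through repeated test renders.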

Contemporary 3D graphics programs offer a wide variety of increasingly complex shading algorithms, and most of them work in a similar fashion: i.e. user-entered values are processed according to predesigned algorithms. The images in Figure 2.4 are sourced from TurboSquid, one of many online mesh repositories. As stated on its website, the goal of TurboSquid is to ‘save artists the time of making a great model, and instead let them add their own personality to their creations’ (“Turbosquid,” n.d.). Existing as a 3D dataset, this virtual coffee cup can be downloaded for a fee. It is one of many products available to 3D users who want to select from a library of existing models and textures.

Technical Rationality

CG research continues today as a sub-field of computer science (Guha, 2015, p. 7) and, compared to the early days of computer graphics, today there is a clearer distinction between software developers and software users. This delineation between research and practice resembles philosopher and academic Donald Schön’s description of “Technical Rationality” as outlined in his 1983 book, The Reflective Practitioner (Schön, 1991).

According to Schön, Technical Rationality promotes a model of professional knowledge which considers skillful practice as “instrumental problem solving made rigorous by the application of scientific theory and technique” (Schön, 1991, p. 21). Technical Rationality stems from a positivist epistemology which is characterised by the idea that “scientific knowledge is the only source of positive knowledge of the world” (Schön, 1991, p. 32) and “real knowledge lies in the theories and techniques of basic and applied science” (Schön, 1991, p. 27). In short, Technical Rationality assumes that scientific research can, and should, be the basis for almost all types of intelligent practice. For Technical Rationality, skillful practice means discerning the problem and applying the appropriate available solutions – and this sounds very much like standard approaches to 3D animation.

As a model of professional knowledge, Technical Rationality emphasises the need for standardisation, and it has been used to great effect in many areas of human endeavour including medicine and engineering. However, according to Schön, the model of Technical Rationality can be dangerously reductive because it fails to account for a practitioner’s ability to deal with divergent situations, and in some cases it precludes this ability. Schön points out that practitioners of all persuasions routinely deal with complexity, uncertainty, instability, uniqueness and value-conflict, and a Technical Rationalist model of skillful practice can’t account for this fact. He warns that if practitioners try to operate according to a Technical Rationalist paradigm, then they might be cutting a complex situation to fit prescribed solutions. In this case a practitioner might become “selectively inattentive to data that fall outside their categories” (Schön, 1991, p. 44) or they may “try to force the situation into a mold which lends itself to the use of available techniques” (Schön, 1991, pp. 44–45). Schön’s warnings are relevant to 3D practitioners because we work with solutions designed by CG researchers and software developers. Schön’s thought indicates that if we focus on the correct implementation of standard procedures (i.e. using the right 3D tools in the right way) then we might be cutting a specific practice situation to fit the design of the software. In other words:

By employing a Technical Rationalist model, we might be missing features of a practice situation which are complex, uncertain, unique or unstable.

In the Technical Rationalist paradigm, knowledge is formed by abstracting from particular situations to find general rules. These general rules are then used in practice to deal with specific situations. Researchers such as Newell, Phong and Sutherland study particular phenomena and develop general rules in order to design mathematical computer models. With the practices of these researchers in mind, we can see how the logic of Technical Rationality plays out for developers and users of 3D software. Along with Heidegger, Rushkoff and Manovich, Schön’s warnings suggest that our approach to 3D animation could be levelled (or Enframed) if we implicitly accept a Technical Rationalist paradigm.

One artist who overtly critiques this paradigm is award-winning 3D animator, David O’Reilly.

Back to basics and the low-poly aesthetic

O’Reilly critiques our propensity to blindly accept CG solutions with his 3D software script “Shitmaker toolkit v1” (O’Reilly, 2016). Available for download from his website, “Shitmaker” is a “free and useless script for Maya” which allows you to “fuck up your favourite 3D models” (O’Reilly, 2016). Like this irreverent tool, O’Reilly’s 3D animation work consistently breaks with convention and uses commercial software in surprising ways.

O’Reilly takes a “back to basics” approach to 3D software, by which I mean that he avoids many of the newer and more complex computer graphics solutions which have been designed to achieve photorealism. For example, O’Reilly avoids lighting and rendering techniques such as subsurface scattering and global illumination. His 2009 film Please Say Something (O’Reilly, 2009), which won the Golden Bear for Best Short Film at the Berlinale, avoids software rendering altogether and is instead created entirely from preview renders. In an essay called “Basic Animation Aesthetics” (O’Reilly, 2010), O’Reilly shares some thoughts regarding the making of this film. He says, “The film makes no effort to cover up the fact that it is a computer animation, it holds an array of artefacts which distance it from reality, which tie it closer to the software it came from” (O’Reilly, 2010, p. 2). As well as exposing the way the work was created to the viewer, a back-to-basics approach means that the “production pipeline [is shortened] to a bare minimum” (O’Reilly, 2010, p. 3). O’Reilly says that because the process is quicker and less rigid, it is easier to make changes and to improvise.

A 3D mesh often starts as a simple geometric form, but the visual style adopted in most 3D animations hides this fact from the viewer. O’Reilly is one of a number of 3D users who have embraced a simple geometric, “low-poly” aesthetic that doesn’t smooth the models to create organic-looking forms – instead leaving them faceted and “boxy”. Artist and animator Eran Hilleli is another proponent of this simple geometric style.

A beautiful example of Hilleli’s work is Between Bears (Hilleli, 2010), an award-winning short film created while Hilleli was still a student at the Bezalel Academy of Art and Design. By utilising qualities inherent in 3D animation, Hilleli’s film achieves some of the same qualities as a painting that doesn’t hide its brushstrokes. Specifically, it allows the process of its production to be evident in the final work and it achieves a visual interplay between abstract shapes and figurative (or recognisable) forms. The minimal detail, muted palette, and ambiguous storyline contribute to this film’s poetic and lyrical tone.

There is an increasing interest in the low-poly aesthetic, which can also be seen in animations such as Pivot (Megens et al., 2009) and Dropp (Nagatsuka, 2011), in illustrations such as those by Mordi Levi (Levi, 2016), and in computer games such as CatDammit! (Fir & Flams, 2014).

Proponents of a low-poly style and a back-to-basics approach utilise qualities inherent in 3D animation software and don’t attempt to hide facts about the way the work was created from a viewer. These strategies can be contrasted with an approach to 3D software which aims to simulate prior media or to simulate photography.

Media transparency

NPR

Whether simulating the look of drawing, painting or photography, many 3D animators aim to hide their production process from the viewer, and many of their creations succeed in looking like they were created using a camera, pencils or a brush. As briefly discussed in the previous section, non-photorealistic rendering (NPR) research develops techniques for rendering 3D images that look like traditional art media such as paint, pencil or pastel.

The short animated film Mata Toro (Mauro Carraro & Jérémy Pasquet, 2010) is a successful example of the NPR genre. Each frame of Mata Toro looks like it was created with pencil, watercolour or pastel. It has a hand-made feel which obscures the fact that it was created primarily using 3D animation software. As this piece shows, it is possible for an animation created using digital tools to simulate the inconsistency and texture of pencil or paint on paper. A more recent example of an NPR aesthetic is Disney’s Paperman (Kahrs, 2012), which combines 3D animation with 2D animation techniques (Oatley, 2013).

There is a small but growing consumer market for animations which present a hand-made aesthetic, but the pursuit of photorealism remains the primary concern in computer graphics research.

Photorealism

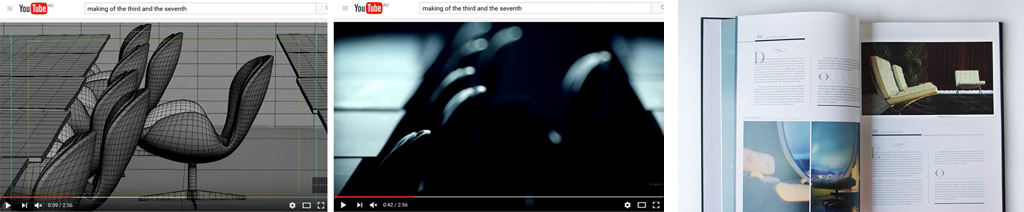

Alex Roman is a 3D animator who creates still images and short films that are indistinguishable from film and photography. The level of detail and precision that Roman has achieved in his highly acclaimed movie The Third and The Seventh (Roman, 2009) represents thousands of hours of work, and the result is an aesthetically beautiful piece which could easily be mistaken for live action. It is difficult for a viewer of this film to imagine that, like Hilleli and O’Reilly’s models, many of the virtual objects in this movie started as simple geometric forms which became more complex as Roman added more and more detail.

Roman is one of many CG artists who strive for photorealism, and this requires that he add lots of detail, smooth his models, and use complex lighting and shading algorithms. His aim is to leave no trace of the production process visible in the finished work. Roman’s approach to his work is consistent with what media theorists Jay David Bolter and Richard Grusin refer to as the ongoing quest for media “transparency” (Bolter & Grusin, 2000).

To speak of a tool or a medium as transparent is to suggest that it (the brush, the painted surface, the software interface or the computer screen) is not explicitly experienced, i.e. that it doesn’t become an object of study.

To speak of transparency implies that the medium is somewhat neutral and doesn’t intervene: that it transparently facilitates, communicates or conveys meaning.

In their 1999 book, Remediation: Understanding New Media, Bolter and Grusin suggest that 3D software can be seen as a continuation of the tradition of representation that began with Renaissance painting (Bolter & Grusin, 2000, p. 21). According to them, it is the quest for media transparency that drives the Renaissance painter’s use of pictorial perspective. Instead of making the viewer aware of the painting as an object, the use of perspective transports the viewer into the picture’s represented space. Bolter and Grusin state that, “if executed properly the surface of the painting dissolved and presented to the viewer the scene beyond” (Bolter & Grusin, 2000, p. 25).

As well as perfectly executed perspective, dissolution of a painted surface requires that there are no visible brush strokes. Bolter and Grusin describe how Renaissance painters “concealed and denied the process ... in favour of a perfected product” (Bolter & Grusin, 2000, p. 25). The same can be said of computer graphics artists such as Roman. 3D animators get mathematically perfect perspective, shading and shadows “for free”, but they still have to work hard to hide the constructed nature of the image – i.e. the fact that it was created on a computer – from a viewer. Default Whippet took hundreds of hours to make – but it would need many more hours of work before a viewer could mistake it for live-action photography.

Bolter and Grusin point out that when an artist is skilled and hard-working enough to successfully hide the process of production from a viewer, the irony is that their expertise goes unnoticed (Bolter & Grusin, 2000, p. 25). This potential lack of recognition might be the impetus behind some of the many “making of” videos shared by 3D artists.

Showing the making process

Currently there are countless 3D animation “making of” videos (including modelling, rigging and texturing reels) available on YouTube and Vimeo (see e.g. Figures 2.11 a, b and c). Created and uploaded by large production teams as well as individual users, these videos are not considered finished works in their own right but are designed to reveal to viewers the process of production behind a finished work. These videos are often interesting because they are proof that the images were computer generated. Their popularity might also reflect a fascination with seeing work in which actions and decisions made throughout production are evident. Roman’s “making of” video and his finished film are both available on the internet (Roman, 2010; see Figure 2.12). He has also published a book, The Third and The Seventh: From Bits to the Lens, which describes his production process in pictures and in words (Roman, 2013).

In their 2010 paper, “A Theory of Digital Objects”, Kallinikos, Aaltonen and Marton define digital objects as “digital technologies and devices and digital cultural artefacts such as music, video or image” (Kallinikos, Aaltonen, & Marton, 2010). With this definition in mind we can see that digital artists (including 3D animators) are simultaneously users (consumers) and producers of digital objects.

Whenever we use a computer we are using a variety of digital objects including (but not limited to) a computer operating system, a specific software application and that application’s various tools. Whether making a 3D animation using 3D software or viewing the resulting animation, the logic of media transparency is therefore equally relevant. 3D animators such as Roman aim for a level of finish that renders the digital medium transparent to viewers of their work and, according to Bolter and Grusin, the trajectory of software interface design reveals the same aim (Bolter & Grusin, 2000, p. 31). Bolter and Grusin explain that the replacement of a text-based interface with a graphical user interface (GUI) was the result of an ongoing quest to make digital media transparent; the aim is for an interface that makes the user feel that they are not confronting a medium at all (Bolter & Grusin, 2000, pp. 32–33). Recent incarnations of this quest speak of a “Natural User Interface” (Boulos et al., 2011; Bruder, Steinicke, & Hinrichs, 2009; Lee, 2016; Park, Lee, Lee, Chang, & Kwak, 2016; Petersen & Stricker, 2009; Subramanian, 2015).

Towards a transparent interface

In 3D animation, the trend toward a transparent interface becomes evident when we compare activities performed by the CG pioneers described above (such as the text-based entry and manipulation of mesh coordinates) with contemporary mesh-creation tools.

As mentioned above, ZBrush is a contemporary 3D package which allows users to interactively “sculpt” virtual geometry in a manner that feels “natural” (Pixologic, 2015). The makers of ZBrush urge users to “Leave technical hurdles and steep learning curves behind, as you sculpt and paint with familiar brushes and tools” (Pixologic, 2015). Rather than interacting primarily via a keyboard or even a mouse, 3D sculpting in ZBrush is often done with a stylus which is held like a pencil. The ZBrush interface, even more than most 3D packages, is based on traditional painting and sculpting metaphors.

The logic behind “artist-friendly” software (see Autodesk, 2016; Pixologic, 2015; Sadeghi, Pritchett, Jensen, & Tamstorf, 2010), such as ZBrush, is to hide algorithmic workings from a user so that the software feels intuitive and easy to use. As explained on the ZBrush website, “ZBrush creates a user experience that feels incredibly natural while simultaneously inspiring the artist within. With the ability to sculpt up to a billion polygons, ZBrush allows you to create limited only by your imagination” (Pixologic, 2015). Similar sentiments are expressed by other 3D software manufacturers. In a recent promotional video by Autodesk called “Imagine, Design, Create!”, a young man enthusiastically describes Autodesk products as the bridge between what you imagine and what you make (Autodesk, 2012). The bridge metaphor suggests that the software provides a transparent or neutral passage between imagining something and making it happen.

Claims made by ZBrush and Autodesk seem to reflect the ultimate dream of a completely transparent medium, i.e. a medium that doesn’t interfere with an artist’s intention or ideas.

But could such a medium ever really exist? And would we want to use it even if it did? O’Reilly and Hilleli’s work suggests that their answer to these questions would be “no”, because neither of these artists approaches their medium as if it were a neutral facilitator, or simply a means to a preconceived end. The distinctive visual styles achieved by O’Reilly and Hilleli have evidently emerged through an appreciation of properties inherent in the software. In other words, it is through an exploration of possibilities suggested by the medium itself that they have each managed to develop a unique voice.

The glitch aesthetic

Instead of seeking media transparency, there are many digital artists who celebrate the ways in which digital media fail to disappear. These artists want to expose the (modernist) myth of media transparency by embracing accidents, unexpected outcomes and computer glitches. One such artist is Curt Cloninger, who insists that a computer glitch can be political because “it reminds us that technologies are not neutral tools, but rather are symptoms of our worldview and cultural norms” (Cloninger, 2014, pp. 13–14). Cloninger defines a glitch as either an interruption in a system or as an exposure of that system (Cloninger, 2014, p. 13). Like other proponents of Glitch (Fell, 2013; Menkman, 2011), Cloninger is interested in how the contours of a system are revealed upon its interruption. He draws upon Heidegger’s philosophy to conceptualise the interplay between interruption and revelation.

While many philosophers emphasise the human capacity for rational reflection, Heidegger points out that in our normal, everyday activities we are not explicitly aware of the things that we are dealing with – it is usually not until they fail us that things become entities, or objects with properties. He famously explains this by describing our typical relationship with a hammer. In use, the hammer usually goes unnoticed and, in a manner of speaking, the hammer is transparent because we “see through” the hammer to the task beyond. For example, when hammering a nail, we are likely to be focused on a task such as hanging a picture; we are unlikely to be focused on the hammer itself (i.e. we don’t explicitly notice its shape, its weight or the colour of its handle). It is only if the hammer breaks or if we miss the nail and hit our finger that the hammer becomes an object that we are explicitly aware of. Heidegger called the way of being of the unnoticed hammer in use, “ready-to-hand” (Heidegger, 1962, pp. 98–99) and the surprising hammer or broken hammer (which becomes an object of attention or deliberate theoretical enquiry) “present-at-hand” (Heidegger, 1962, p. 101). In his 1927 book Being and Time, Heidegger says “The ready-to-hand is not grasped theoretically at all ... The peculiarity of what is proximally ready-to-hand is that, in its readiness-to-hand, it must, as it were, withdraw in order to be ready-to-hand quite authentically” (Heidegger, 1962, p. 99). Heidegger suggests that our ready-to-hand relations with things are different from, and prior to, a conscious analysis of them.

If they are working well, tools tend to recede from conscious experience.

Another feature of tools is that we can’t really think of them as isolated entities. For example, a hammer is only a hammer because there are nails. Tools belong to a system, network or “totality” of equipment (Heidegger, 1962, p. 97).

Following Heidegger’s explanation, Cloninger reasons that “the contours of the system are revealed upon its interruption” (Cloninger, 2014, p. 14). When the system breaks, it’s like the revelation of the broken (present-at-hand) hammer. This revelation (e.g. noticing the hammer) makes us look afresh at all the things with which we have been unconsciously interacting. “Heidegger’s broken hammer causes us to stop and examine the entire world ... a world we had been using implicitly, a world with which we were entangled unawares” (Cloninger, 2014, p. 14). Cloninger’s 2014 publication Sabotage! Glitch Politix Man[ual/ifesto], co-authored with Nick Briz, is a call to break hammers so that we become aware of the technologies that we usually interact with and take for granted.

In “A Theory of Digital Objects”, Kallinikos et al. outline several features that make digital objects distinctly different from physical objects. One of these defining features is that digital objects are editable. This means that it is always possible to act upon and modify them (at least in principle). Another feature is that digital objects are interactive, which means that they offer users (or viewers) choices of how to act (e.g. navigating a website or choosing items from 3D software menus). Another feature discussed in this paper is openness, which means that “digital objects are possible to access and to modify by means of other digital objects, as when picture-editing software is used to bring changes to digital images” (Kallinikos et al., 2010).

Given that editability, interactivity and openness are qualities inherent in digital objects, it is interesting to note that I feel confined, reduced and constrained by standard approaches to 3D software. Digital artist Rosa Menkman suggests that this sense of confinement is linked to media transparency. In her online publication “Glitch Studies Manifesto”, she writes that “The quest for complete transparency has changed the computer system into a highly complex assemblage that is often hard to penetrate and sometimes even completely closed off” (Menkman, 2010b, p. 3). In her manifesto Menkman says that “The glitch is a wonderful experience of an interruption that shifts an object away from its ordinary form and discourse. For a moment I am shocked, lost and in awe” (Menkman, 2010b, p. 5). Menkman suggests that computer glitches encourage us to see that the typical use of a computer is based on a “genealogy of conventions”, while in reality computer software can be used in many different ways (Menkman, 2010b, p. 3).

Glitches as creative opportunities

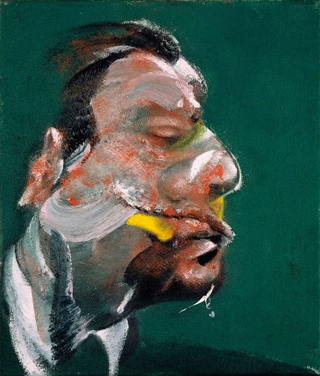

Like Cloninger’s, Menkman’s work contests the modernist ideal of a transparent medium and celebrates the ways that media fail to disappear. Computer software is a medium which modulates data, and Menkman’s work plays with the modulation of data so that, in addition to seeing through the medium to what it represents, the viewer is also aware of the medium itself. Menkman treats computer glitches as opportunities for creating intriguing images. As described by Kallinikos et al., digital objects are inherently open; they can be accessed and modified using other digital objects. Menkman explores this feature of digital objects in A vernacular of file formats: A Guide to Databend Compression Design (Menkman, 2010a). Menkman illustrates this document with a series of portraits, some of which are pictured below (Figure 2.14). These images have been created by altering the header information on RAW image files before opening them in Photoshop, a process which Menkman calls databending.
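The general principle of databending can be sketched in a few lines: a file is treated as a plain sequence of bytes, and some of those bytes are deliberately altered before the file is handed back to an image viewer. The example below is not Menkman’s actual procedure. The function name, the stand-in “file”, and the choice of which bytes to change and how are all assumptions made for illustration.

```python
def databend(data, start, length, xor_mask=0xFF):
    """Return a copy of `data` with `length` bytes from `start` XOR-ed with `xor_mask`."""
    bent = bytearray(data)
    end = min(start + length, len(bent))  # never write past the end of the data
    for i in range(start, end):
        bent[i] ^= xor_mask
    return bytes(bent)

# Hypothetical stand-in for an image file: 16 "header" bytes followed by
# 32 identical "pixel" bytes. A real file would be read with open(path, "rb").
original = bytes(range(16)) + b"\x80" * 32

# Flip every bit in four bytes of the header region and leave the rest alone.
bent = databend(original, start=4, length=4)
```

Because the header tells a program how to interpret everything that follows, even a small change of this kind can alter how the whole image is decoded, or prevent it from opening at all. The outcome is hard to predict in advance, which is part of its appeal as a creative strategy.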

While some people experience technical bugs or imperfections as negative, Menkman emphasises “the positive consequences of these imperfections by showing the new opportunities they facilitate” (Menkman, 2010b, p. 2). Menkman insists that visual artefacts resulting from technical bugs and mistakes are creative opportunities; she appreciates glitches in the same way that a painter might appreciate splashes or dribbles of paint.

Becoming alive to the accident

Like Menkman, Francis Bacon values the capacity for his medium (paint) to continually surprise (Sylvester, 1975), and he evidently values an artist’s capacity to respond to surprises more than their capacity to entirely control a practice situation (Sylvester, 1975).

Bacon praises the work of 17th-century painter Rembrandt van Rijn for his “profound sensibility, which was able to hold onto one irrational mark rather than onto another” (Sylvester, 1975, p. 58). Although he uses traditional painting tools, Bacon values accidents because they disrupt what he can do with ease and help him to avoid habitual and conventional practices; they help him to avoid illustration and sometimes help him achieve something more “poignant” and “more profound than what [he] really wanted” (Sylvester, 1975, p. 17).

Counter to a Technical Rationalist approach, for Bacon, it’s important not to proceed in an entirely formulaic or rational manner. For Bacon, becoming a skillful practitioner means that “One possibly gets better at manipulating the marks that have been made by chance, which are the marks that one made quite outside reason … one becomes more alive to what the accident has proposed for one” (Sylvester, 1975, p. 53).

The “profound sensibility” cherished by Bacon is evident also in the work of Menkman, O’Reilly and Hilleli, because using commercial software in new ways means being open and responsive to the surprising opportunities that it affords.

In his book Heidegger, Art, and Postmodernity, Heidegger scholar Iain Thomson contrasts the challenging-forth of Enframing (which Thomson calls technological revealing) with bringing-forth or poetic revealing (Thomson, 2011, p. 21). While challenging-forth imposes a predefined framework, bringing-forth allows things to reveal themselves (somewhat) on their own terms. Thomson suggests that poetic revealing is illustrated in the comportment of a skilled woodworker who studies a piece of wood and, developing a feel for its inherent qualities, allows a sculptural form to emerge through an improvisational process. In contrast to the sensitivity of the woodworker, Thomson imagines an industrial factory indiscriminately reducing pieces of wood to woodchips and then recomposing them to form straight, flat units of timber ready for use in the building industry. As standardised sheets, the wood has become standing-reserve, “optimized, enhanced, and ordered for maximally flexible use” (Thomson, 2011, p. 19). The factory indiscriminately imposes a predefined framework, order or form onto individual pieces of wood. By contrast, the woodworker works with qualities inherent in each particular piece of wood and ends up with an outcome that is context specific and could not have been known in advance. Importantly, the woodworker is aware that there are multiple appropriate ways to respond to the wood’s inherent qualities, but is also aware that not just any response will do. For Thomson, the industrial factory is an example of “the obtuse domination of technological Enframing”, while the woodworker’s comportment illustrates “the active receptivity of poetic dwelling” (Thomson, 2011, p. 21).

There are similarities between Bacon’s profound sensibility and Heidegger’s descriptions of bringing-forth, because both emphasise our ability to respond to glimmers of meaning or felt significance.

Thomson calls this approach to things a comportment of “active receptivity” (Thomson, 2011, p. 21); he also describes it as a “phenomenological comportment” (Thomson, 2011, p. 20).

Context of practice: Conclusion

Technical Rationality is the dominant model implicit in contemporary 3D software, with CG researchers continuing to provide increasingly accurate (i.e. “realistic”) and efficient representational solutions for use by 3D practitioners. But artists such as O’Reilly, Hilleli and Menkman don’t simply accept these solutions at face value. O’Reilly’s work explicitly disrupts predesigned solutions and, rather than seeking media transparency (either in their tools or in finished works), O’Reilly and Hilleli each explore qualities inherent in 3D software.

Not limited to 3D software, Menkman’s work responds to qualities inherent in digital media more generally. Exploring digital media as modulated data, she responds to accidental (and unexpected) outcomes, embracing them as creative opportunities.

Rather than necessarily seeking a straightforward, efficient and transparent process, these artists explore opportunities arising from the process itself. Exploring qualities inherent in their medium and responding to unexpected outcomes are creative strategies which allow these artists to take a fresh approach to their work.

These strategies could be regarded as conscious or unconscious attempts to escape Enframing and to foster a comportment of active receptivity; a profound sensibility which is able to hold onto one irrational mark rather than onto another.

The diversity of approaches to digital media and 3D software discussed in this section indicates that our approach to technology is just as important as the particular technological artefacts or devices that we use. As we will see in the next section, this emphasis on approach, attitude and comportment accords with Heidegger’s thought.